The emerging use of Artificial Intelligence (AI) in social security institutions is enabling more proactive and automated social security services. Nevertheless, applying AI in social security is also challenging, and institutions are still defining how they can make the most of this new technology in practice. This article introduces AI and describes some application experiences in social security, based on discussions held in the ISSA European Network Webinar: Artificial intelligence for social security institutions as well as on other material.

Background on AI – Myths, Reality and Risks

In 1950, Alan Turing in his ground-breaking essay asked the simple question “Can machines think?” If a machine can think, it can behave intelligently – and perhaps one day surpass the intelligence of its human creators as well. This idea of “superintelligence” has been a source of inspiration for many science fiction stories.

Why, and why not, AI?

There is an international competition – The Loebner Prize – that annually awards prizes to the computer programmes that are most human-like. To date, there has not been a winner that has truly passed the test, and scientists are far from designing artificial superintelligence. In reality, decades may be needed before it becomes possible to build artificial general intelligence (AGI), which refers to AI that is human-like. Current approaches can generally be termed “narrow AI” – systems that are intelligent not because they manage to imitate human intelligence but because they can carry out tasks that would otherwise require human intelligence, time, and effort to an unsustainable extent. These AI algorithms are gradually replacing and complementing the traditional algorithms used to solve problems with computers.

The algorithmic processes of AI are unlike traditional ones and can be more efficient for many tasks. In the case of AI, instead of writing a programme for each specific task, many examples are collected that specify the correct (or incorrect) output for a given input. AI algorithms then take these examples and produce a programme that does the job. The resulting programme should also work for new cases. If the data change, the programme can change too by being retrained on the new data. Massive amounts of computational power are now available for these tasks, which is why this approach can be cheaper than writing a task-specific programme.
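
To make the contrast concrete, the following minimal Python sketch learns a decision rule from labelled examples instead of hard-coding it. The function names, the one-dimensional input and the example values are illustrative assumptions and are not taken from any system discussed in this article.

```python
from typing import List, Tuple

# Each example pairs an input value with its known correct output.
# All numbers below are invented for illustration.
Example = Tuple[float, bool]


def fit_threshold(examples: List[Example]) -> float:
    """Return the cut-off that misclassifies the fewest examples."""
    values = sorted({x for x, _ in examples})
    # Candidate cut-offs: below all values, between neighbours, above all values.
    candidates = (
        [values[0] - 1.0]
        + [(a + b) / 2 for a, b in zip(values, values[1:])]
        + [values[-1] + 1.0]
    )

    def errors(cut: float) -> int:
        return sum((x > cut) != label for x, label in examples)

    return min(candidates, key=errors)


def predict(cut: float, x: float) -> bool:
    """Apply the learned rule to a new, unseen case."""
    return x > cut


old_data = [(1.0, False), (2.0, False), (3.0, True), (4.0, True)]
new_data = [(1.0, False), (3.0, False), (4.0, False), (5.0, True), (6.0, True)]

rule = fit_threshold(old_data)       # learned from the first set of examples
retrained = fit_threshold(new_data)  # same code, new data, different rule
```

Nobody writes the cut-off by hand here: it comes out of the examples, and retraining on `new_data` moves it without any change to the code.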

This capability of scalability and harnessing insights from data has made AI an essential and complementary tool for policymakers and service providers aiming to achieve social good. Various AI tools are being used for crisis response, economic empowerment, alleviating educational challenges, mitigating environmental challenges, ensuring equality and inclusion, promoting health, reducing hunger, information verification and validation, infrastructure management, public and social sector management, and even security and justice.

The multidisciplinary nature of AI

While currently AI can be defined as “narrow AI”, the approach of AI algorithms is to imitate the process of human thinking. This includes modelling how humans actually think and act. Designing AI algorithms requires understanding human reasoning, what humans do and how they act. This also includes modelling how an ideal human should think and act. These aspects are understood through the knowledge of rational and ethical reasoning. Modern AI focuses on making AI that knows how it “should act”. The success of these algorithms is judged by performance measures adapted from the fields of statistics, physics, mathematics, philosophy, economics, social science, neuroscience, and cognitive science. This is why building a workable AI algorithm needs an interdisciplinary approach, which is only beginning to emerge.

Under the umbrella of AI there are a number of disciplines. These disciplines ask questions such as – can computers talk? recognize speech? understand speech? learn and adapt? see? plan and make decisions? To answer these questions, a number of subdivisions of AI have emerged and evolved – natural language processing, computer vision, machine learning, data mining and many more. The last few years have seen a surge of new methods and techniques, such as convolutional neural networks, that promise high performance and consume massive amounts of data. These algorithms, in general, follow a pipeline that starts with data acquisition and then goes through a number of steps: data cleaning, storage and management, feature selection and data wrangling, visual analysis, an iterative process of modelling, performance measurement, monitoring, and finally producing an output. Throughout these processes, the data used are split into different parts to train, validate, and test the performance of the models based on the data at hand. This process is somewhat similar to how humans process data.
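
The split into training, validation and test parts can be sketched in a few lines of Python. The 70/15/15 proportions, the function name and the fixed random seed are assumptions chosen for illustration; the article does not prescribe any particular split.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(
    records: Sequence[T],
    train_share: float = 0.7,        # assumed proportion, not from the article
    validation_share: float = 0.15,  # assumed proportion, not from the article
    seed: int = 0,
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle the records and cut them into train, validation and test parts."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = round(len(shuffled) * train_share)
    n_validation = round(len(shuffled) * validation_share)
    train = shuffled[:n_train]
    validation = shuffled[n_train:n_train + n_validation]
    test = shuffled[n_train + n_validation:]  # whatever remains
    return train, validation, test


train, validation, test = split_dataset(range(100))
```

The model is fitted on `train`, tuned against `validation`, and only at the end measured once on `test`, so that the final performance figure comes from records the model has never seen.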

Risks

As AI algorithms go through sub-processes, there are a number of areas to be careful about. One of them is overfitting. Sometimes, the designed algorithms fit the training dataset so well that they fail to give the right solution in the real world. Apart from these considerations, data, algorithms, and human interaction in an algorithm can be potential sources of bias that can be a reason for AI failure. Massive amounts of data are fed into the machine to recognize certain patterns. Unstructured data from the web, social media, mobile devices, sensors and smart devices (i.e. the Internet of Things) make data absorption, linking, sorting, and manipulation difficult. If data are not carefully curated, the dataset may be fraught with incomplete or missing data, or may be inaccurate or biased. This may even cause an inadvertent revelation of sensitive data unless care is taken to remove personal data from all datasets.
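
Overfitting can be illustrated with a deliberately extreme toy model that simply memorises its training examples. The data, the names and the model itself are invented for this sketch; real overfitting is usually subtler, but the symptom, a gap between training and test accuracy, is the same.

```python
from typing import Callable, List, Tuple

Example = Tuple[int, bool]


def memorising_model(train: List[Example]) -> Callable[[int], bool]:
    """An extreme overfit: store every training example, answer False otherwise."""
    table = dict(train)
    return lambda x: table.get(x, False)


def accuracy(model: Callable[[int], bool], data: List[Example]) -> float:
    """Share of examples for which the model returns the correct label."""
    return sum(model(x) == y for x, y in data) / len(data)


# Invented data: the true pattern is simply "x is at least 50".
train = [(x, x >= 50) for x in range(0, 100, 2)]  # even inputs, seen in training
test = [(x, x >= 50) for x in range(1, 100, 2)]   # odd inputs, never seen

model = memorising_model(train)
train_accuracy = accuracy(model, train)
test_accuracy = accuracy(model, test)
```

Here `train_accuracy` is 1.0 while `test_accuracy` is 0.5: the model reproduces the training set perfectly yet has learned nothing about the underlying pattern.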

AI systems are prone to algorithmic bias. Some algorithms are systematically biased towards a specific type of data. Apart from that, the bias depends largely on the engineers who design the algorithms, how these are deployed, and how they are ultimately used. A lot depends on how a problem is framed. While framing a problem, scientists decide what they actually want to achieve when they create a learning model. An algorithm designed to determine “creditworthiness” that is programmed to maximize profit instead of maximizing the number of loans could ultimately but unintentionally decide to give out predatory subprime loans. Even the composition of the engineering team can be biased. How a problem is framed depends on who designs it, who decides how it is deployed, who gets to decide the acceptable level of accuracy, and who decides if the applications of AI are ethical. Failure to address these issues has left its mark on the proliferating algorithms that dictate what political advertisements people see, how recruiters screen job seekers, and even how security agents are deployed in neighbourhoods.

Towards an ethical use of AI

Although AI as a science dates back a long way, the use of AI in the tech world is relatively new, and hence many market leaders have been slow to develop the intuition and working knowledge needed to deal with individual, societal and organizational risks. Some tend to overlook the perils and some overestimate the risk mitigation capabilities. Moreover, there is not much consensus among executives regarding what risks to anticipate. Many companies argue that they have taken precautions – they are gathering more representative training data, regularly auditing and checking for unintended biases, and conducting impact analyses for certain groups. However, many AI ethics researchers argue that AI algorithms are trained on old data. An algorithm that performs fairly on an old dataset may not result in a system that will perform fairly in reality. Ethics in AI has therefore become a key research topic.
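
One simple form such an audit can take is to compare how often a system decides favourably for each group. The sketch below is only an illustration under stated assumptions: the group labels, the decisions and the choice of a ratio of selection rates as the measure are hypothetical, and a real audit would use several complementary measures.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favourable decisions per group, from (group, approved) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    favourable: Dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        favourable[group] += int(approved)
    return {group: favourable[group] / totals[group] for group in totals}


def disparity_ratio(rates: Dict[str, float]) -> float:
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favoured
    return min(rates.values()) / highest


# Invented decisions for two hypothetical groups, A and B.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)
rates = selection_rates(decisions)
ratio = disparity_ratio(rates)
```

In this invented sample, group B is selected half as often as group A. Such a gap does not prove unfairness by itself, but it flags where a closer review is needed, and repeating the check on recent decisions addresses the concern that a model judged fair on old data may drift in practice.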

Application experiences in social security

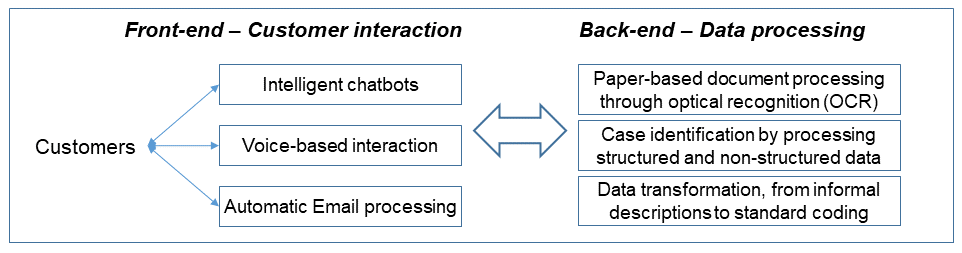

There is a growing trend in social security to apply AI, particularly to improve customer services through automated 24/7 front-end support and also, at an earlier stage of adoption, to automate back-end processes (Figure 1).

Several social security institutions have implemented intelligent chatbots to improve the quality and availability of online customer services across different branches and types of benefits. Intelligent chatbots can simulate human behaviour and respond autonomously to users' inquiries. They are available 24/7 and can adapt to users' preferences.

The Superintendency of Occupational Risks (Superintendencia de Riesgos del Trabajo – SRT) of Argentina implemented an intelligent chatbot called "Julieta" to respond to inquiries about work injury benefits. The chatbot fulfilled the objectives of providing automated and personalised customer services, not only by answering the most frequent questions but also by handling inquiries about the status of customers' operations such as enrolment and benefit applications. The key success factors identified include the development of a quality knowledge-base and the permanent training of the chatbot involving a multidisciplinary team.

In turn, the intelligent chatbot implemented by the Norwegian Labour and Welfare Administration (NAV) has made it possible to respond to an increased demand for information in the context of the COVID-19 crisis. Concretely, during the period March to May 2020, the chatbot responded to more than 8,000 daily inquiries, around four times the pre-COVID number of 2,000. The key success factors were the chatbot training based on a daily updated knowledge-base, the focus on a specific type of information, and a seamless connection from the chatbot to a human expert. The chatbot is being extended to new topics, notably to support employers and the self-employed.

In Uruguay, the Social Insurance Bank (Banco de Previsión Social – BPS) implemented an intelligent chatbot to respond to employers’ inquiries about the scheme for domestic workers. By applying natural language processing and dialogue management techniques, the chatbot understands the customer’s intentions and suggests appropriate actions. Deployed in January 2019, the intelligent chatbot currently handles 97 per cent of all inquiries, while the remaining 3 per cent involve expert staff. The implementation time was about one year, with six months devoted to training and testing. The key facilitating factors identified have been the permanent update of the knowledge-base as well as development and operation by a multidisciplinary team.
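
The general pattern behind these chatbots, matching an inquiry to a known intent and handing over to a person when no intent fits, can be sketched as follows. This is not the implementation used by any of the institutions mentioned: simple keyword overlap stands in for real natural language processing, and the intents, keywords, answers and the `min_matches` parameter are all hypothetical.

```python
import re
from typing import Dict, Set, Tuple

# Hypothetical knowledge base: intents, trigger words and answers are invented.
KNOWLEDGE_BASE: Dict[str, Tuple[Set[str], str]] = {
    "register_worker": (
        {"register", "enrol", "worker", "employee"},
        "Placeholder answer: how to register a worker.",
    ),
    "pay_contributions": (
        {"pay", "payment", "contribution", "contributions"},
        "Placeholder answer: how to pay contributions.",
    ),
}
HANDOFF = "human_expert"


def tokenize(message: str) -> Set[str]:
    """Lower-case the message and keep its distinct words."""
    return set(re.findall(r"[a-z]+", message.lower()))


def route(message: str, min_matches: int = 2) -> Tuple[str, str]:
    """Return (intent, answer), or hand over when no intent matches well enough."""
    words = tokenize(message)
    best_intent, best_score = HANDOFF, 0
    for intent, (keywords, _answer) in KNOWLEDGE_BASE.items():
        score = len(words & keywords)  # number of shared keywords
        if score > best_score:
            best_intent, best_score = intent, score
    if best_score < min_matches:
        return HANDOFF, "Let me connect you with a staff member."
    return best_intent, KNOWLEDGE_BASE[best_intent][1]


answered = route("How do I register a new worker?")
escalated = route("What is the weather like?")
```

Keeping the knowledge base as data rather than code reflects the success factor reported above: business staff can update it continuously without changing the programme, and the hand-over branch keeps a human expert within reach for the inquiries the chatbot cannot resolve.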

The General Organization for Social Insurance (GOSI) of Saudi Arabia launched an experimental use of intelligent chatbots for service delivery. The objective was to develop an intelligent agent to respond to customers’ inquiries and to simplify certain services and transactions. The agent communicates with customers through different chat and social networking applications.

Some institutions are also using AI to improve back-end processes, notably to process large volumes of data comprising traditional databases as well as unstructured text and images of digitalized paper-based documents.

Employment and Social Development Canada (ESDC) applied AI to identify beneficiaries of the Guaranteed Income Supplement (GIS), which is a cash benefit targeting low-income old-age persons. In two months, Machine Learning models identified over 2,000 vulnerable Canadians as entitled to the GIS by processing more than 10 million records of unstructured text data. In order to maximize the coverage of vulnerable beneficiaries, the business experts of the GIS programme decided that the model should have a high degree of inclusion and intentionally accepted false positives that would have to be reviewed manually.
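
The trade-off accepted by the business experts can be expressed as a choice of decision threshold. The sketch below is an illustration only, not ESDC's method: the scores, labels, function names and the recall targets are invented, and recall (the share of truly eligible persons who are flagged) is used here as one possible reading of "degree of inclusion".

```python
from typing import List, Tuple

Scored = Tuple[float, bool]  # (model score, truly eligible?) from labelled validation data


def pick_threshold(scored: List[Scored], target_recall: float) -> float:
    """Highest threshold that still flags at least target_recall of eligible cases."""
    eligible = sum(1 for _, is_eligible in scored if is_eligible)
    if eligible == 0:
        raise ValueError("validation data contains no eligible cases")
    for threshold in sorted({score for score, _ in scored}, reverse=True):
        found = sum(1 for s, is_eligible in scored if is_eligible and s >= threshold)
        if found / eligible >= target_recall:
            return threshold
    raise ValueError("target recall cannot be reached")


def review_workload(scored: List[Scored], threshold: float) -> Tuple[int, int]:
    """Return (cases flagged, false positives among them) at a given threshold."""
    flagged = [is_eligible for s, is_eligible in scored if s >= threshold]
    return len(flagged), flagged.count(False)


# Invented validation sample.
sample = [(0.9, True), (0.8, True), (0.7, False), (0.6, True),
          (0.4, False), (0.3, True), (0.1, False)]

moderate = pick_threshold(sample, target_recall=0.75)
inclusive = pick_threshold(sample, target_recall=1.0)
```

In this sample, moving from 75 per cent to full recall lowers the threshold from 0.6 to 0.3 and doubles the number of false positives that staff must review, from one to two. Setting the target is therefore a business decision about how much manual review is acceptable in exchange for missing fewer eligible persons.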

The experience showed the importance of using representative data and capturing nuances as well as determining the adequate metrics and thresholds for the business needs by building the training dataset together with business experts. As lessons learned, the ESDC highlighted that the quality of the underlying data is crucial and that AI projects require multidisciplinary teams with data scientists and business experts. The main identified risks comprised the selection of proper tools and the data literacy gaps among the staff in the organization.

Finland’s Social Insurance Institution is starting to apply AI in two ways: (i) improving customer services by combining e-services with intelligent chatbots, and (ii) using AI-based image recognition to automate administrative processes by recognizing documents.

Similarly, the National Social Security Institute of Brazil (INSS) is implementing an intelligent chatbot – called Helô – for providing automated responses 24/7 to customers’ inquiries in the context of the myINSS personalised e-services. A first version deployed in May 2020 has already processed about a million inquiries. The INSS is also using AI to speed up the detection of beneficiary deaths, which helps prevent undue payments.

Likewise, the Auxiliary Unemployment Benefits Fund of Belgium (Caisse auxiliaire de paiement des allocations de chômage – CAPAC) carried out a preliminary AI application to process paper forms through Optical Character Recognition (OCR), which, however, did not produce satisfactory results. Despite these difficulties, CAPAC keeps AI applications on the agenda and plans to develop an intelligent chatbot.

In turn, the Austrian Social Insurance (Dachverband der österreichischen Sozialversicherungsträger – SV) is applying AI for multiple purposes. Firstly, it has deployed an intelligent chatbot – OSC Caro – which provides digital assistance to customers in various areas such as childcare allowances, sick pay and reimbursements. In addition, a voice recognition system supports call centre services by automatically forwarding customer inquiries to the corresponding offices. The system’s language model, which is based on AI, was trained to recognize specific terms. Furthermore, AI is also used to automatically dispatch emails to the corresponding departments with up to 93 per cent accuracy. Finally, an ongoing project is implementing an AI-based semi-automatic reimbursement process for medical service fees. In this case, AI is applied to automate several tasks such as recognizing the submitted documents, encoding diagnoses using the ICD-10 standard, and extracting the data required for the reimbursement (e.g. invoice amount, IBAN). This semi-automatic treatment speeds up the reimbursement process and supports the staff involved.

At the governmental level, several countries are defining national strategies on Artificial Intelligence. In particular, the Estonian strategy aims at enabling a proactive government based on a life-event service design and delivering personalized services with zero bureaucracy through an intensive application of AI.

The Estonian vision of AI-based digital public services is being put into practice through #KrattAI, which is an interoperable network of AI applications enabling citizens to use public services through voice-based interaction with virtual assistants. The more than 70 ongoing projects under this strategy, with 38 already operational, cover a wide range of areas including environmental applications, emergency support, cybersecurity and social services. In particular, in the context of unemployment insurance, AI is applied through an intelligent chatbot for customer services and in the processing of long-term unemployment risk cases.

The lessons learnt include ensuring the quality and privacy of the data involved, providing metadata, and managing the scalability of AI-based applications by using cloud infrastructure and developing adequate procurement models. Furthermore, the limits of automation for public services have to be correctly assessed.

Conclusions and key takeaways

Artificial Intelligence is gradually becoming a key technology for social security organizations, as it makes it possible to increase administrative efficiency by automating processes and to assist staff in tasks requiring human decisions.

However, although positive developments can be observed, several challenges also arise. These relate especially to the limitations and risks of AI, and to the trade-off between process automation and human control. Furthermore, the methodological differences between AI and traditional software development pose challenges to institutions carrying out the projects.

Among the critical factors, data availability and quality are highlighted as a must in order to train the AI systems appropriately. Such "data needs" require establishing an organizational strategy to use internal data as well as, potentially, data from other organizations, and also involve assessing compliance with data protection regulations.

AI adoption requires specific institutional capacities. Institutions need to have a detailed understanding of the goal of the project, select data that are representative of the real world, choose simple solutions, pay special attention to the explainability of the algorithms used, choose models that not only give the best results but also pass carefully designed fairness standards, and finally ensure transparency as a basis for accountability.

In addition, institutions applying AI emphasised the importance of having projects developed by multidisciplinary teams involving business staff and data scientists. Along the same lines, staff literacy on AI and data management also becomes a key factor. Business owners and project managers have to understand the implications of applying AI in order to define which processes could be automated and which decisions must remain in human hands.

The ISSA expresses its gratitude to Moinul Zaber, Senior Academic Fellow, Operating Unit on Policy-Driven Electronic Governance, United Nations University, for his technical contribution to this article.

References

- ISSA European Network Webinar: Artificial intelligence for social security institutions, 22 September 2020

- Webinario de la AISS: Mejorando la atención al público a través de servicios en-línea avanzados, 18 June 2020

- ISSA Webinar: Enhancing efficiency and customer services through advanced process automation, 17 June 2020

- ISSA Webinar: Enhancing digital channels through advanced e-services, 4 June 2020